Microsoft SQL Server is a relational database management system (RMDBS) and has been the leader in Gartner's Magic Quadrant (MQ) for the last few years.

Microsoft markets at least a dozen different editions of SQL Server, aimed at various audiences and workloads - ranging from small single-machine applications to large internet-facing applications with many concurrent users.

Despite being one of the more expensive products in the Microsoft portfolio, a lot of businesses use SQL Server to run the back end databases for their business critical systems - which is no surprise given its recurring MQ leadership status.

Traditionally, IT departments and software vendors would recommend that SQL Servers were built with as much memory as the server could physically hold, and as many cores as they could get in two CPUs.

Licensing Model Change

Then SQL Server 2012 arrived and Microsoft changed the licensing model from physical CPUs to cores of a physical CPU - forcing a change in the way resources are specified for a SQL Server. Instead of cramming the server with lots of cores over two CPUs, IT were trying to find the best way of achieving performance with fewer cores to reduce the expense of licensing.

Nearly every person who has ever had to look after a SQL Server knows that it’s a hungry gluttonous beast when it comes to compute resources (CPU, memory and disk). Left unchecked, a SQL Server will consume memory until there is nothing left and then move on to using disk space, all the while pushing the CPU closer and closer to 100%. This is in fact by design.

SQL Server was designed to use RAM to hide the fact that disk sub systems are traditionally slower and often misconfigured, so do not perform optimally when being used for SQL Server workloads. Fast forward to today where solid state disks and all flash arrays have become more affordable, and therefore companies have started to deploy them for their SQL Servers to speed them up. This gives a good boost in performance, however it is at a pretty expensive cost.

What most people don't know is that in Windows Server, all disk access, regardless of whether it is a read or write operation only, works on a single thread. It doesn't matter how many cores you have in your server or how fast your disk sub system is, Windows can only perform each operation using the same single thread. This leads to a massive bottleneck for systems that read and write data frequently to disk, such as SQL Server.

MaxParallel

Enter MaxParallel from DataCore Software. Working with Microsoft, DataCore have created a piece of software that replaces the driver in Windows that is responsible for talking to the disk sub system. MaxParallel allows Windows to utilise multiple threads to access the disk sub system. This speeds up concurrent data access by removing serial resource contention in multi-core servers, which is an ideal solution for any business-critical high-velocity OLTP (Online Transaction Processing) and real-time analytics workload.

HammerDB is a handy tool that allows you to run a TPC-C benchmark against your database instances (and not just SQL Server). You can specify several different factors to customise your testing, yet the tool has an easy to use interface. It’s also very well documented, with some good instruction on how to set up your own load tests.

Using HammerDB, we carried out extensive testing using a SQL Server with the following specification:

SQL Server 2008 R2 Standard Edition

Windows Server 2016 Standard Edition

CPU: Intel Xeon E5-2680 v2 (2.80GHz)

RAM: 16 GB

HammerDB was configured to try and get each concurrent user to perform one million transactions per minute in a five minute period. Each test increased the number of concurrent users, starting with a single user, increasing to 24. Each test ran three times; we were expecting the results to be relatively the same, but this allowed us to check for consistency.

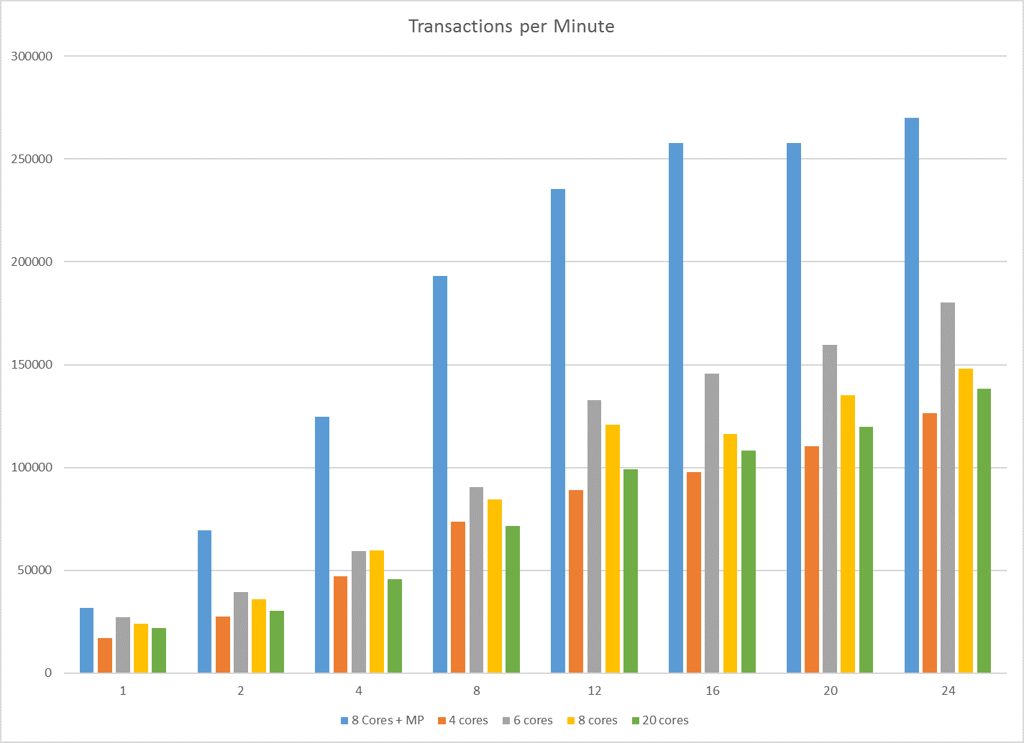

Each test was then repeated against the same SQL Server, but with a different amount of CPU cores. We tested 4, 6, 8 and 20 cores, all without MaxParallel, and compared the results against the same SQL Server with only 8 cores and MaxParallel enabled.

As the graph below shows, the results are impressive. An 8 core SQL Server with MaxParallel enabled is capable of outperforming the same server with 20 cores, without MaxParallel. This means that we could reduce the core count by twelve; allowing better performance and a saving of over £100,000 (approx.) in SQL Server Enterprise Edition core licensing!

But that's a synthetic test I hear you cry! So, in the real world, we deployed MaxParallel on our production CRM and finance system SQL Servers. These are configured like so:

SQL Server 2014 Enterprise Edition

Windows Server 2012 Standard Edition

CPU: Intel Xeon E5-2650 v2 (2.00GHz)

Cores: 6

RAM: 20 GB

The average latency on the CRM data file was between 1000 and 1500ms! That’s well over the 5 – 10ms good performance point. Latency over 20ms on a SQL data file is considered bad by the industry. After installing MaxParallel, the average latency is now an amazing 10-15ms - that's a reduction in latency of 99%.

Month end is a long and extremely heavy workload on the SQL Servers, but since the deployment of MaxParallel the workloads are now finishing almost a whole two hours quicker.

Therefore, we can reduce the number of cores in our SQL Servers; lowering the cost of licensing, while increasing the amount of money we save and the performance of our SQL Servers. All this by spending a fraction of the cost of a single SQL Server Enterprise Edition license for MaxParallel.

We are co-hosting a webinar with DataCore on 13th December to discuss our experiences testing MaxParallel in further detail. To join, please see below:

- By Mike Prince (Senior Technical Consultant)