Developers have seen many advances in technology, from microprocessors to hypervisors and virtual machines. In this blog, we explore the advantages of the two most recent eras: containers and orchestration.

Physical Machines

Step back in time to when developers started writing shared applications that ran on the then-new Intel x86 microprocessors. These microprocessors powered not only personal computers but also servers: the physical machines that were hidden away in racks in air-conditioned rooms.

As each slot in a rack was less than two inches high (a standard rack unit is 1.75 inches) and nineteen inches wide, it could hold at most two machines. Applications couldn't run on the machines directly but needed intermediary software, a host operating system (OS), to rely on. Examples included Windows NT Server and Linux.

Time progressed and hardware manufacturers were able to squeeze more and more powerful microprocessors into the rack, but not necessarily more machines. Putting a new application into production was expensive because businesses usually insisted on strong isolation between their applications, so new machines were bought and installed every time. This was the era of the physical machine.

Virtual Machines

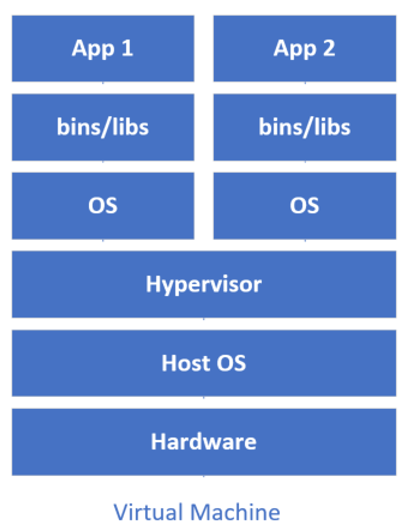

As time went by, new software products appeared, including the hypervisor. The hypervisor was loaded onto the physical machine, either on top of the host OS or directly on the hardware, and intercepted guest calls to the hardware, making one physical machine appear to be many.

Each set of virtual hardware, known as a virtual machine (VM), could host its own distinct guest operating system. A single powerful physical machine could now support many applications, each contained in its own guest OS and strongly isolated from the others. This was the era of the virtual machine.

Containers

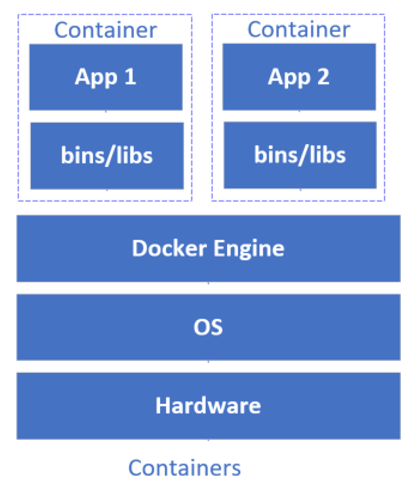

This was a good step forward but it had an inefficiency: each VM had to boot its own separate guest OS, in addition to the host OS. If every application could be hosted on the same underlying OS, then why the duplication and the corresponding waste of resources?

The answer was quite simple. Why not just have a single shared host OS, such as Linux, supporting multiple applications with isolation between them, removing the need for the hypervisor and each guest OS? This was achieved using the namespace features of the Linux kernel, which give each application's processes their own isolated view of system resources (process IDs, network interfaces, mounted file systems and so on) so that applications are segregated from one another. To run this way, an application must be capable of running on a Linux distribution, sharing the underlying Linux kernel. Enter the era of the container.
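To make the namespace idea concrete, here is a minimal sketch that lists the namespaces the current process belongs to. It assumes a Linux host with `/proc` mounted; the function name `current_namespaces` is purely illustrative, and on other systems the function simply returns an empty result.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-only; absent on other systems


def current_namespaces(ns_dir=NS_DIR):
    """Return {namespace type: identifier} for the current process.

    On Linux each entry is a symlink whose target looks like
    'pid:[<inode number>]'. Two processes that report the same
    identifier share that namespace. Returns an empty dict where
    the directory is unavailable.
    """
    if not os.path.isdir(ns_dir):
        return {}
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may not be readable; skip them.
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:20} {ident}")
```

Running this once on the host and once inside a container would normally show different identifiers for entries such as `pid`, `net` and `mnt`, which is the segregation described above.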

Because of this more efficient architecture, containers are much more lightweight than VMs, and many containers can be hosted on a single VM or physical machine. A container is started from an image. The image contains the application and all its required dependencies, binaries and even custom OS files (though not the kernel, which is shared with the host). Hence, the same container image behaves consistently wherever it runs, and a container typically takes seconds to start, update or remove. Compare this to a VM, which can take minutes to boot.

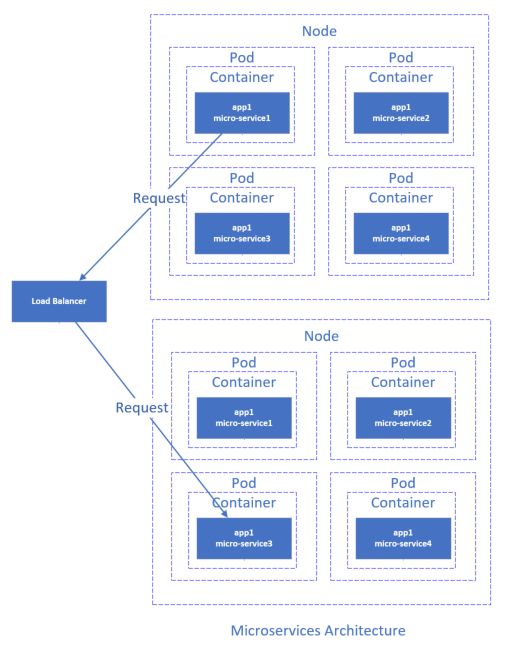

Another advantage is that large monolithic applications can be broken apart into much smaller interconnected microservices, each installed in a separate container, rather than running as a single unit.

Docker is a well-known and respected container system for applications that run on Linux. Docker can also run Linux containers on Windows by hosting a cut-down Linux VM on the Windows Hyper-V hypervisor.

Orchestration

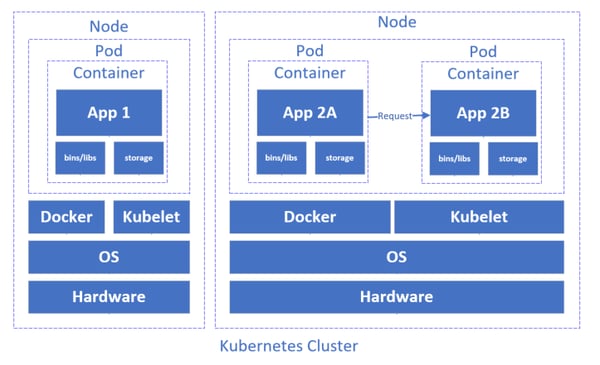

To complete the picture, it is worth mentioning orchestration. At a minimum, orchestration provides life-cycle management of containers. You may need to provision many identical instances of an application in containers across multiple machines and load-balance incoming requests between them to improve availability. You may also need to roll out updates without downtime. An orchestrator handles both, and Docker Swarm is one example.
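The two orchestration duties mentioned here, spreading requests across identical replicas and updating them without downtime, can be illustrated with a toy model. This is a sketch only: it is not how Docker Swarm is implemented, and the replica names (`web-1` and so on) and version labels are made up for the example.

```python
from itertools import cycle


def round_robin(replicas, request_count):
    """Assign each incoming request to the next replica in turn."""
    if not replicas:
        return []
    targets = cycle(replicas)
    return [next(targets) for _ in range(request_count)]


def rolling_update(replicas, new_version):
    """Replace replicas one at a time, yielding the fleet after each step.

    Only one replica is swapped at any moment, so the remaining
    replicas keep serving traffic throughout the update.
    """
    fleet = dict(replicas)  # copy: replica name -> version
    for name in fleet:
        fleet[name] = new_version  # stands in for "stop old, start new"
        yield dict(fleet)


if __name__ == "__main__":
    fleet = {"web-1": "v1", "web-2": "v1", "web-3": "v1"}
    print(round_robin(list(fleet), 5))
    for step in rolling_update(fleet, "v2"):
        print(step)
```

A real orchestrator adds health checks, rollback and placement across machines on top of this basic loop.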

In 2014, Google open-sourced its own Linux-based container orchestration technology called Kubernetes, or K8s for short. K8s is cloud-agnostic, very mature and runs container images built with Docker. A Kubernetes cluster provides logical Pods, each hosting one or more containers. Pods are dynamically scheduled onto Nodes, each of which is a VM or physical machine. A production cluster is usually built with at least three Nodes so that it can survive the loss of one.
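As an illustration of how an application is described to Kubernetes, the sketch below builds a Deployment manifest as a plain Python dictionary and prints it as JSON. The helper name `deployment_manifest`, the application name `web` and the image reference are hypothetical; the field layout follows the `apps/v1` Deployment resource.

```python
import json


def deployment_manifest(name, image, replicas=3):
    """Build a Kubernetes Deployment (apps/v1) as a plain dict.

    Kubernetes keeps `replicas` identical Pods running, each
    containing one container started from `image`.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # "example.com/shop/web:1.0" is a made-up image reference.
    manifest = deployment_manifest("web", "example.com/shop/web:1.0")
    print(json.dumps(manifest, indent=2))
```

The JSON output could be saved to a file and applied with `kubectl apply -f`, after which the cluster decides which Nodes the three Pods land on.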

Many modern applications are built from multiple discrete internal components, all plumbed together and running within a single process. With containers and orchestration, you can now more easily break apart these monolithic applications by turning each module into a separate microservice. The orchestrator then deploys or updates each microservice independently, in separate but connected containers.

What are the advantages?

In summary, the advantages of containers and orchestration are that applications can be deployed, updated or destroyed in seconds (compared to minutes for a VM), can make better use of comparable hardware, support microservices and are not tied to any proprietary system or cloud vendor.

If you have any queries or would like to discuss this in further detail please don't hesitate to contact us.

- By Nigel Wardle (Application Architect / Cloud Consultant)